Integral World: Exploring Theories of Everything

An independent forum for a critical discussion of the integral philosophy of Ken Wilber

Donald J. DeGracia

Donald J. DeGracia, Ph.D., is Associate Professor at the Wayne State University Department of Physiology. Dr. DeGracia studies the mechanisms of cell death following brain ischemia and reperfusion. This clinically relevant research models brain injury that occurs following stroke or following resuscitation from cardiac arrest. The main focus of this work is the causes and consequences of reperfusion-induced inhibition of protein synthesis. See also his metaphysical website and his blog dondeg.wordpress.com.

“Brief” Note to Andrew Smith

Donald J. DeGracia

My purpose was... to contrast the general idea of assigning probabilities to complex systems... as opposed to taking a network approach to these problems.

While it is unclear to me whether there is a general criticism of my article, I would like to thank Mr. Smith for commenting in his article Many kinds of chance, many kinds of necessities on my short article Evolution: chance or dynamics? I acknowledge up front that I did not read all the articles on Integral World pertaining to Mr. Wilber's (perhaps unfortunate) association of Eros with evolution, and so I thank him for pointing to his excellent summary of additional ideas and information. I would here like to: (1) address a couple of comments raised in his article by way of clarification and (2) make a couple of comments on his article Does evolution have a direction?

I. Points of Clarification.

Mr. Smith noted:

“He (me) seems to be conflating the two key elements of Darwinism—random variation and natural selection”.

However, I did not talk about natural selection, which is the process whereby a specific adaptation provides some type of increased chance at reproductive success at the individual or species level. Nor did I address the evolutionary concept of random variation per se, which term refers to the (generally Gaussian) distribution of traits in a reproductive community. My purpose was much more circumscribed and sought to contrast the general idea of assigning probabilities to complex systems such as a protein molecule or random genetic mutations in an organism, as opposed to taking a network approach to these problems. In fact, my article had little to say about biological evolution per se, and was meant more to expose the reader to basic concepts in network dynamics (and point them to Sui Huang's excellent 2004 paper) and how these may contribute to conversations about complex systems, including biological evolution.

Mr. Smith then notes:

“In fact, there is so much interaction or potential for interaction between random variation and constraint-driven evolution that it may not be meaningful to argue that one or the other is more important.”

To a practicing scientist, if there are two or more specific mechanisms, one would seek to determine the relative contribution of each to whatever system is under study. If two ostensibly different mechanisms turn out to be the same, then that would also be useful information. I think it is abundantly clear that random variation and constraint-driven evolution [e.g. “intrinsic constraints”, to use Huang's (2004) term] are in fact two specific mechanisms, so the issue is one of determining the relative contributions of each to, say, biological evolutionary processes. Consider Huang's (2004) statements on this matter:

“It is important to note that the Type I (selection by variation) and Type II (intrinsic constraints) mechanisms are not mutually exclusive, but are consistent with each other and can cooperate in some rather convoluted way. For example, a particular intrinsically robust structure, such as the fractal branching patterns of pulmonary bronchi, or a network motif in molecular networks…, may simply have been exploited— because they happen to be advantageous — rather than created by natural selection. Variability and selection pressure may not have been strong enough to mould such structures from scratch (e.g. because it might take too long to sample the configuration space)… In other words, functional adaptation may be a byproduct of an inherently robust process that creates structure based on internal design principles. Selection then does the fine-tuning.” [Parenthetical comments mine].

Here, Huang is clearly suggesting a lesser role for variation by selection and a greater role for intrinsic constraints in the sculpting of new species over time. Thus, it is meaningful to distinguish the relative contributions of each of these mechanisms. Just because the interactions are both numerous and complex does not mean we would wish to ignore or confuse them. It only means it will keep scientists busy for some time to work out the details (which is a good thing from a career perspective!).

Finally, Mr. Smith states:

“Each network represents the highest, most complex form of organization on its particular level. Its formation is critical to the emergence of a new, higher-level holon, beginning the process of socialization again.”

This statement may indicate that there was some degree of Kuhnian[1] “communication breakdown” between myself and Mr. Smith. Mr. Smith's comment suggests a much broader framework than I intended to discuss. Again, my article was meant simply to convey how networks are providing a new technical framework that allows scientists to tackle complex systems. I did not intend any broader implications, except perhaps in my statements about the general role of mathematics in describing physical reality.

II. Brief Comments on Mr. Smith's Work

Here I only wish to make brief comments on Mr. Smith's article. He states:

“I define [complexity] in terms of the number of different states, or possibilities, that a system (a living thing or a machine) can exist in: the more states, the greater the complexity. This definition of complexity is, I believe, reasonably close to more precise definitions based, for example, on the number of computational steps required to create a system (Chaitin 1973; Bennett 1988; Lloyd 2007).”

Now, I have not read Mr. Smith's book, so I do not know if he is aware that this is precisely Ludwig Boltzmann's[2] famous definition of entropy:

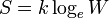

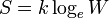

“…where k = 1.3806505(24) × 10⁻²³ J K⁻¹ is Boltzmann's constant, and the logarithm is taken to the natural base e. W is the Wahrscheinlichkeit, the frequency of occurrence of a macrostate or, more precisely, the number of possible microstates corresponding to the macroscopic state of a system”.[3]

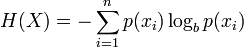

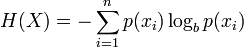

Later, Claude Shannon[4] was to use a formula of the same form to define the entropy (or complexity) of information[5]:
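For reference, the two formulas can be written in standard textbook notation as below. The symbols H and p_i (the probability of the i-th state) are not defined in the text above and are assumed here from the usual statement of Shannon's formula. Shannon's logarithm is often taken to base 2, which only changes the units. The last line, the equal-probability special case, is a standard observation added for illustration and is not a claim from either article.

```latex
\begin{align}
  S &= k \ln W
      && \text{(Boltzmann: $W$ microstates, Boltzmann's constant $k$)} \\
  H &= -\sum_{i=1}^{W} p_i \ln p_i
      && \text{(Shannon: state $i$ occurs with probability $p_i$)} \\
  p_i = \tfrac{1}{W} \ \text{for all } i
    &\;\Longrightarrow\; H = \ln W
      && \text{(equal probabilities recover Boltzmann's form, up to $k$)}
\end{align}
```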

These are both classical definitions of systems in exactly the terms I spoke of in my previous article, where specific states are assigned probability values and treated by classical probability theory. In both conceptions, the complexity of the system is defined in terms of the number of states of the system. The entropy of a system is then related to the probability of occurrence of a given microstate. These ideas tie in to the idea of thermodynamic equilibrium, such that the states with the highest probabilities will be those that generate equilibrium in the system.
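A small numerical sketch may make the link between "number of states" and "probabilities of states" concrete. It assumes the standard forms S = k ln W and H = -Σ p ln p. The function names, the choice of k = 1 (dimensionless units), and the two example distributions are illustrative assumptions, not anything taken from Mr. Smith's article or mine.

```python
import math

def shannon_entropy(probs):
    """Shannon entropy in nats: H = -sum(p * ln p), skipping zero-probability states."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def boltzmann_entropy(num_states, k=1.0):
    """Boltzmann entropy S = k ln W. k = 1.0 is an assumption giving dimensionless units."""
    return k * math.log(num_states)

W = 8  # hypothetical number of states

# Every state equally likely: the probabilistic count reduces to counting states.
uniform = [1.0 / W] * W

# One state dominates: the same 8 states, but far less uncertainty about which occurs.
peaked = [0.93] + [0.01] * 7

h_uniform = shannon_entropy(uniform)
h_peaked = shannon_entropy(peaked)

print("uniform :", h_uniform, "  ln W:", boltzmann_entropy(W))
print("peaked  :", h_peaked)
```

When all states are equally probable, the Shannon value equals ln W, which is the sense in which both definitions count states. When one state dominates, the entropy drops even though the number of possible states is unchanged.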

In layman's terms, this is where the idea comes from that a pot of boiling water has a chance to actually freeze. The frozen state of the molecules is one of the possible states of the system and so has a probability associated with it. However, it is a very low probability occurrence compared to the astronomical number of other states. Thus, if the system did freeze, it would be a state of low entropy, or higher organization, because it would be the manifestation of a very low probability state.

However, things have evolved considerably since Boltzmann and Shannon. Interestingly, Mr. Smith cites the work of Greg Chaitin[6], who has been one of the major players extending these ideas in new directions. Chaitin's work should be discussed along with that of Stephen Wolfram[7] because both authors have extended our understanding of complexity. Both Wolfram and Chaitin discuss how to understand the complexity of natural systems, and their thinking is quite convergent.

Chaitin has studied (invented actually) algorithmic information theory.[8] I will only state his final result: For any given natural system drawn at random from all systems, there is no theory that can describe that system. Chaitin comes to this idea by asking (in effect): if I have any arbitrary system, what is the probability I can generate a theory that will output the given system? He then derives the result that the probability is zero. If the reader is unfamiliar with his work, much of it is free for download from his web site (footnote 6), or you can watch a cool video of him here.[9]
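Algorithmic information theory measures the complexity of a string by the length of the shortest program that outputs it. That quantity cannot be computed in general, so the sketch below substitutes something much cruder: the size of the string after zlib compression, which is only a rough upper-bound stand-in and is not Chaitin's method. The helper name, the string length, and the fixed random seed are all assumptions made for illustration.

```python
import random
import zlib

def compressed_size(data: bytes) -> int:
    """Length in bytes after zlib compression; a crude proxy for description length."""
    return len(zlib.compress(data, 9))

n = 10_000  # hypothetical string length in bytes

# A string with an obvious short description: "repeat '01' 5000 times".
patterned = b"01" * (n // 2)

# A pseudo-random string of the same length (fixed seed so the run is repeatable).
rng = random.Random(0)
noisy = bytes(rng.getrandbits(8) for _ in range(n))

size_patterned = compressed_size(patterned)
size_noisy = compressed_size(noisy)

print("patterned:", size_patterned, "bytes after compression")
print("noisy    :", size_noisy, "bytes after compression")
```

The patterned string shrinks to a tiny fraction of its length, while the noisy one barely shrinks at all. Note the irony that the "noisy" string here does have a short description (the seed plus the generator), which zlib cannot find; that gap between what a system looks like and what generated it is the subject of the Wolfram discussion that follows.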

Wolfram has come to a similar insight via “empirical mathematics” where with sheer computational brute force he studies how mathematical systems behave.[10] He has called this (somewhat pretentiously) a “new kind of science”. [11]

The basic result of Wolfram can be stated like this: a very simple program can output a system that is essentially random. By “random” what Wolfram means is that, if you could only see the system, and not the program for which the system is the output, you would think that the system is random, and would be unlikely to determine that there was a simple program that output the system. Wolfram's buzzword is “computational irreducibility”. This is a bit of a subtle idea, but it means essentially that there is a ceiling, an upper limit on how complex a system can be, and that upper limit is when the system looks random to us. Examples of such systems might be: the weather, human behavior, the color of a rock, the pattern of clouds in the sky, and so on.
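A concrete instance of "a very simple program whose output looks random" is the elementary cellular automaton Wolfram calls Rule 30. The sketch below is a minimal version under stated assumptions: the grid width, the number of steps, the wrap-around edges, and the function names are my own choices for illustration, not taken from Wolfram's book.

```python
def rule30_step(cells):
    """Apply Rule 30 once: new cell = left XOR (center OR right), with wrap-around edges."""
    n = len(cells)
    return [cells[(i - 1) % n] ^ (cells[i] | cells[(i + 1) % n]) for i in range(n)]

def run_rule30(width=101, steps=50):
    """Start from a single live cell in the middle and return every row, initial row first."""
    cells = [0] * width
    cells[width // 2] = 1
    rows = [cells]
    for _ in range(steps):
        cells = rule30_step(cells)
        rows.append(cells)
    return rows

rows = run_rule30()

# Print the first few rows as a picture: '#' is a live cell, '.' is a dead one.
for row in rows[:16]:
    print("".join("#" if c else "." for c in row))

# The column directly under the starting cell is the part usually described as random-looking.
center_column = [row[len(row) // 2] for row in rows]
print("center column:", "".join(str(bit) for bit in center_column))
```

The whole "program" is the one-line update rule, yet someone shown only the center column would have a hard time guessing that such a rule produced it.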

I am not explaining these concepts in any great detail so the reader is encouraged to look them up on the net for additional information.

The convergence of these authors' ideas lies in how they come to the same conclusions about system complexity. They both essentially state that most systems in the universe cannot be understood in a formal or theoretical fashion. Instead, the systems must be taken at face value, and we must simply admit we will never understand most natural systems by anything resembling a Newtonian determinism.

Thus, in his article, Mr. Smith states:

“Science can be succinctly defined as the attempt to identify the causes of phenomena. But the process does not stop there. When we think we know what causes a phenomenon, we attempt to use this knowledge to predict other phenomena, and ultimately, by causing them by our own actions. Indeed, scientific theories are generally validated only by the successful predictions they make.”

However, the insights provided by the above authors put stark and severe limits on such a conception of science. Indeed, Mr. Smith's view of science harkens back to that of Karl Popper[12] and represents a pre-“post-modern” view of science. The insights of Chaitin, Wolfram, and others of their ilk (notably Stuart Kauffman[13]) give us a “post-modern” science that recognizes an essential element of unpredictability in the universe. While this appears as randomness, all of these authors stress in one form or another that this “seeming randomness” is in fact the source of creativity in the Cosmos. Kauffman even goes on to suggest (disingenuously, if I might add) that such insights provide a basis for atheistic scientists to be able to have discussions with theistic religious people. Chaitin is the most interesting in this regard because he tackles the notion of randomness head on and shows how slippery a concept it really is. He shows, in fact, that the term “random” does not have a definition.[14]

So, the above is in no way meant as a criticism of Mr. Smith's fine work. It is meant to point out the efforts of other workers along these lines, to show that there is an increasing convergence of thinking in modern science that recognizes the limits of science. Scientists themselves are coming to realize how they are a part of greater wholes, and are overcoming the (delusional?) desire to hold reality in the palm of their hands in the form of intellectual constructs.

I would like to thank Mr. Smith for engaging with these thoroughly interesting and vital ideas.

NOTES

1. http://en.wikipedia.org/wiki/Thomas_Kuhn

2. http://en.wikipedia.org/wiki/Ludwig_Boltzmann

3. This is quoted from the link in note 2, and I left the other hyperlinks intact in this quote.

4. http://en.wikipedia.org/wiki/Claude_Shannon

5. http://en.wikipedia.org/wiki/Entropy_%28information_theory%29

6. http://www.umcs.maine.edu/~chaitin/. The reader should note that Chaitin has a picture of Leibniz on his web site. Leibniz is the fellow who gave us the term “Monad”.

7. http://en.wikipedia.org/wiki/Stephen_Wolfram

8. http://en.wikipedia.org/wiki/Algorithmic_information_theory

9. http://videolectures.net/ephdcs08_chaitin_lcai/. STRONGLY RECOMMENDED. Chaitin is a hoot and he is as smart as they get!

10. Cool Wolfram video (just need to get past the affectations): http://mitworld.mit.edu/video/149

11. http://en.wikipedia.org/wiki/A_New_Kind_of_Science

12. http://en.wikipedia.org/wiki/Karl_Popper

13. Who, interestingly, is a colleague of Sui Huang. See here for a cool Kauffman video: http://www.youtube.com/watch?v=8I5mYDUARY4

14. In his book Meta-math. A GREAT read; STRONGLY RECOMMENDED. http://www.amazon.com/Meta-Math-Quest-Gregory-Chaitin/dp/1400077974
