

Integral World: Exploring Theories of Everything

An independent forum for a critical discussion of the integral philosophy of Ken Wilber

Stanley N. Salthe, Ph.D. Zoology, 1963, Columbia University, is Professor Emeritus, Brooklyn College of the City University of New York, Visiting Scientist in Biological Sciences, Binghamton University and Associate Researcher of the Center for the Philosophy of Nature and Science Studies of the University of Copenhagen. He is the author of Evolutionary Biology (1972), Evolving Hierarchical Systems (1985), Development and Evolution (1993), Evolutionary Systems (1998) and The Evolution Revolution (n.d.).

Salthe SN (2003) Entropy: what does it really mean? Genet Syst Bull 32:5-12. Reposted with permission of the author.

Entropy: What does it really mean?

Stanley N. Salthe

Analytical Preliminary


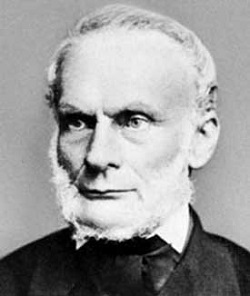

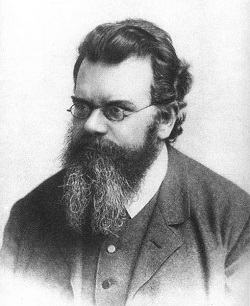

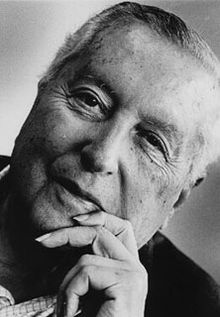

Systematicity is a concept. Concepts are systems. In my view, concepts are never fully circumscribed by explicit definitions. As different definitions of the same phenomenon accrue, it could even turn out that the historically first attempt at one winds up a “standard deviation” away from the “intersection” of all versions of a concept. Some might say that this shows that a concept evolves. I follow Foucault (1972) in seeing instead a gradual clarification of a concept that was only roughly adumbrated at first. That is, I see a development of its meaning, which therefore must be fixed from the beginning in a system, or ecology, of society-specific concepts. Fixed, but vague. The definitions we prefer in science are supposed to be explicit and crisp, so that they may be made operational when mediated by equations. But each of these may be somewhat of a falsification of the actual idea, which, if it gives rise to several definitions, must be richer, and vaguer.

Here I will seek the full meaning of entropy by examining its four major manifestations in modern science: Carnot’s entropie (Carnot 1824 / 1960); Boltzmann’s most probable condition, S, usually interpreted as disorder (Boltzmann, 1886 / 1974); Prigogine’s delta S, or change in entropy (Prigogine, 1955); and Shannon’s H, or information carrying capacity (Shannon & Weaver, 1949). The “intersection” of these definitions would be close to the real meaning of entropy, but can it be stated explicitly in words? First we examine:

Rudolf Clausius

(1) The Carnot-Clausius entropy, entropie, which could be defined as a measure of the negefficiency of work. Its production increases as the rate of work, or power, increases above the rate at greatest energy efficiency for the dissipative system involved. It is commonly estimated in actual cases by the heat energy transferred from an available gradient to the environment as a result of doing work.
I believe that, more generally, other waste products produced during consumption of an energy gradient should be taken (since they are of lesser energy quality than the original gradient) to be part of the entropy produced by the work, along with the heat generated. For an obvious example, sound waves emanating from some effort or event are unlikely to be tapped as an energy gradient before being dissipated. Nor would the free energy emanating from a natural open fire (here we have at least one exception: the opening of pine cones in trees adapted to fire disclimaxes). So, I suggest that all energy gone from a gradient, and not accounted for by what is considered to be the work done by its consumer after some time period, should be taken as entropic, if not as entropy itself, which is usually held to be represented only by the completely disordered energy produced by frictions resisting the work. That is, any diminution of energy from a gradient during its utilization for work that cannot be accounted for by that work would be interpreted as part of its dissipation. This approach seems to me to follow from Boltzmann’s interpretation of entropy as disorder (see below) since the lesser gradients produced are relatively disordered from the point of view of the consumer responsible for producing them (again, see below). This approach would also allow us in actual cases to use gradient diminution as a stand-in for the entropy produced, given that we know the amount of energy that went into work (the exergy).

This aspect of the entropy concept (also called physical entropy) has traditionally been constructed so as to refer exclusively to equilibrium conditions in isolated systems. Only in those conditions might it be estimated, from the increase in ambient temperature after work has been done. Hence one hears that entropy is a state function defined only for equilibrium conditions in isolated systems.
This stricture follows from entropie’s technical definition as a change in heat from one state to another in an isolated system when the change in state is reversible (i.e., at equilibrium, irrespective of the path taken to make the change). The present approach takes this as an historico-pragmatic constraint on the physical entropy concept, making it applicable to engineering systems, and therefore, from the point of view of seeking the broader meanings of the concept, merely one attempt among others. One point to note about the engineering version is that entropy is therein measured as a change in heat divided by the average temperature of the system prior to the new equilibrium. This means that a given production of heat in a warmer system would represent less entropy increase than the same amount produced in a cooler system. Thus, while hotter systems are greater generators of entropy, cooler ones are more receptive to its accumulation.

Since the production of entropy during work can theoretically be reduced arbitrarily close to none by doing the work increasingly slowly (up to the point where it becomes reversible), physical entropy is the aspect of the entropy concept evoked by the observation that haste makes waste. Of course, it is also the aspect of the concept evoked by the fact that all efforts of any kind in the material world meet resistance (which generates friction, which dissipates available energy as heat).

Ludwig Boltzmann

(2) The Boltzmann (or statistical) entropy, S, which is generally taken to be the variety of possible states, any one of which a system could be found to occupy upon observing it at random. This is often called the degree of disorder of a system.
It is usually inferred to imply that, at equilibrium, any state contributing to S in a system could be reached with equal likelihood from any other state, but this is not the only possibility, as it has been pointed out that the states might be governed instead by a power law (Tsallis entropy). In principle, S, a global state of a system, spontaneously goes to a maximum in any isolated system, as visualized in the physical process of free diffusion. However, since it is an extensive property, it may decline locally providing that a compensating equal or greater increase occurs elsewhere in the system. At global equilibrium such local orderings would naturally occur as fluctuations, but would be damped out sooner or later unless some energetic reinforcement were applied to preserve them. This aspect of the entropy concept establishes one of its key defining features— that, in an isolated system, if it changes it is extremely likely to increase because, failing energy expenditure to maintain them, a system’s idiosyncratic, asymmetrical, historically acquired configurations would spontaneously decay toward states more likely to be randomly accessed. This likelihood is so great that it is often held that S must increase if it changes. We may note in this context that, as a consequence, heat should tend to flow from hotter to cooler areas (which it does), tending toward an equilibrium lukewarmness. This would be one example of a general principle that energy gradients are unstable, or at best, metastable, and will spontaneously dissipate as rapidly as possible under most boundary conditions. Entropie in the narrow(est) sense would be assessed by the heat produced during such dissipation when it is assisted by, or harnessed to, some (reversible) work. But, then, will the negefficiency of work also spontaneously increase? Or, under what circumstances must it increase? With faster work; but also with more working parts involved in the work. 
Therefore, as a system grows, it should tend to become less energy efficient, and so grow at increasingly slower specific (per unit) rates. This is borne out by observations on many kinds of systems (Aoki, 2001). But then, must dissipative systems grow? It seems so (Salthe, 1993; Ulanowicz, 1997). This is one mode by which they produce entropie, which they must do.

Boltzmann’s interpretation of physical entropy as disorder assumes major importance in the context of the Big Bang theory of cosmogony, because that theory has the Universe producing, by way of its accelerating expansion, extreme disequilibrium universally. This means, among other things, that there will be many energy gradients awaiting demolition, as matter is the epitome of non-equilibrial energy. This disequilibrium would be the cause of the tendency for S to spontaneously increase (known as the Second Law of thermodynamics), as by, e.g., wave front spreading and diffusion. Note again that the engineering model of physical entropy has entropy increasing more readily (per unit of heat produced) in cooler systems. This suggests that the cooling of the universe accompanying its expansion has increased the effectiveness of the Second Law even as the system’s overall entropy production must have diminished as it cooled. Note also that one of the engineering limitations on the entropy concept can be accommodated very nicely in this context by making the (not unreasonable, perhaps even necessary) assumption that the universe is an isolated system (but, of course, not at equilibrium, at least locally, where we observers are situated).

Statistical entropy, S, is the aspect of the entropy concept evoked by the observation that, failing active preservation, things tend to fall apart, or become disorderly, as well as the observation that warmth tends to be lost unless regenerated (a point of importance to homeotherms like us).
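
The idea that S is the "most probable condition" and tends toward a maximum in an isolated system can be illustrated with a toy count of states. The sketch below is an illustration only and is not taken from the original text. It assumes N distinguishable particles in a box divided into two equal halves, counts the number of arrangements W for each left/right split with a binomial coefficient, and reports the dimensionless entropy ln W (Boltzmann's constant set to 1). The function names and the choice N = 100 are arbitrary.

```python
from math import comb, log

def multiplicity(n_total: int, n_left: int) -> int:
    """Number of ways to choose which n_left of n_total particles sit in the left half."""
    return comb(n_total, n_left)

def boltzmann_entropy(n_total: int, n_left: int) -> float:
    """Dimensionless entropy S/k = ln W for a given left/right split."""
    return log(multiplicity(n_total, n_left))

def most_probable_split(n_total: int) -> int:
    """Left-half occupancy with the largest multiplicity."""
    return max(range(n_total + 1), key=lambda n: multiplicity(n_total, n))

if __name__ == "__main__":
    N = 100  # assumed particle number, chosen only for illustration
    for n_left in (0, 10, 25, 50):
        # Entropy rises as the split approaches an even distribution.
        print(n_left, round(boltzmann_entropy(N, n_left), 2))
    print("most probable split:", most_probable_split(N))
```

In this toy model the fully segregated arrangement (all particles on one side) has a single microstate, while the even split has by far the most, which is one way to picture why an idiosyncratic configuration tends to decay toward states that are more likely to be randomly accessed.
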
Ilya Prigogine

(3) Next we consider the Prigogine (1955) change in entropy, delta S, of a local system, or at some locale. Delta S may be either positive or negative, depending upon relations between S production within a system (which, as the vicar of the Second Law in nonequilibrial systems, must always be positive locally within an isolated system), and S flow through the system. In general, because of fluctuations, a local system would not be equilibrated even within global equilibrium conditions. So, if more entropy flows out of a system than is produced therein because some is incoming and flowing through as well, then delta S would be negative, and the system would be becoming more orderly (negentropic), as when a dissipative structure is self-organizing. This can be the case when the internal work done by a system (producing entropy during work while consuming an energy gradient) results in associated decreases in other embodied free energies (which decreases also produce entropy, but not from the gradient powering the work), followed by most of the entropy flowing out (as heat and waste products of lesser energy quality than the precursors).

More generally, if entropy flows through a system, and some is produced therein as well, then more would tend to flow out than was produced internally (if the system remains intact), and so its entropy change would be negative. In this case, the system would be maintaining itself despite taking in entropy (as, e.g., heat, buffeting, toxins and free radicals), which could have disrupted it. The work done internally maintains the system. Note that this gives us the simplest model of a dissipative structure. In it, a managed flow of entropy intruding from outside the system is a necessary condition to allow the entropy produced inside (during the work of entropy management), when added to that flowing through, to promote self-organization.
That is, a flow through of entropy is a necessary prerequisite for self-organization. This is crucial for understanding the origin of life. A proto-living system must therefore be located within a larger scale dissipative structure, driven by its flows. When such a protosystem finds a way to temporarily manage impinging disorder, it can self-organize into a system that, by producing its own disorder from available gradients during internal work, comes to be able to more effectively manage impinging disorder by way of the forms produced by that internal work.

Delta S is an extensive property of a given locale or subsystem within a system wherein, globally, S must increase. I take much of delta S to represent local changes in internal free energy, but it could also signal changes in system / energy gradient negefficiency by way of configurational alterations in the work space, or, put in a more Prigoginean way, changes in energy flows relative to the forces focused from the energy gradients being consumed.

The necessity for positive local entropy production in a dissipative structure is the aspect of the entropy concept evoked by the observation that one has to keep “running” (producing entropy internally and shipping it out) in order just to maintain oneself as is. Or, that making and maintaining a system is always taxed by having to expend some available energy on entropy, which, of course, connects to the observation, relative to entropie, that all efforts meet resistance.

It has been pointed out to me that the units of delta S are different from those of entropie. Here is a good place to remind the reader that I am seeking vaguer definitions. I seek a concept that would define both a substance and its changes, being vaguer than both, as in {basic concept {substance {changes in substance}}}.
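
The bookkeeping behind delta S can be written compactly. The notation below is the split commonly used in nonequilibrium thermodynamics; it is not spelled out in the original text, and the symbols $d_iS$ (entropy produced inside the system) and $d_eS$ (net entropy exchanged with the surroundings, negative when more flows out than in) are introduced here only for illustration.

$$
dS = d_i S + d_e S, \qquad d_i S \ge 0
$$

Read this way, the local entropy change is negative only when net export exceeds internal production, that is, when $d_e S < 0$ and $|d_e S| > d_i S$. The boundary case $d_e S = -d_i S$ gives $dS = 0$, which corresponds to "running" just to maintain oneself as is.
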
Claude Shannon

(4) Finally there is the Shannon, or informational, entropy, H, which is the potential variety of messages that a system might mediate (its capacity for generating variety), or more generally, the uncertainty of system behavior. The exact mathematical form of H is identical to that of S, but with physical constraints removed, thereby generalizing it. Thus, {H {S}}. That is, physical entropy, when interpreted statistically as disorder (S), is a special kind of entropy compared to informational entropy. Disorder in the physical sense is just the variety of configurations a system can be observed to take over some standard time period, or {variety {disorder}} = {variety per se {variety of accessed physical configurations}}. Note that one of the added constraints at the enclosed level here is that disorder will spontaneously increase, so we might better have written ‘disordering’ as in {variety {disorder {disordering}}}.

It is often asserted that physical entropy in the negefficiency sense (entropie) has nothing whatever to do with H. In my view, negefficiency measures the lack of fittingness between an energy gradient and its consumer, and therefore must be informational in character. That is, as negefficiency increases, the lack of information about its gradient in a consumer is increasing, and therefore the unreliability of their ‘fit’ must increase. This means that as a gradient is dissipated increasingly rapidly, the information a system has concerning its use to do work, embodied in its forms and/or behavior, becomes increasingly uncertain in effect. Since natural selection is working on systems most intensely when they are pushing the envelope (Salthe, 1975), efficiency at faster rates could evolve via selection in more complex systems, modifying the information a system has concerning its gradients.
But, at whatever rate a system establishes its maximum efficiency, (a) work rates faster than that will still become increasingly inefficient, and (b) now slower rates (away from the reversible range, of course) would be less efficient than the evolved optimum rate.

In the Negentropy Principle of Information (NPI) of Brillouin (1956), information (I) is just any limitation on H (with I + H = k), and is generated by a reduction in degrees of freedom, or by symmetry breaking. On the other hand, H is also generated out of information, most simply by permutation of behavioral interactions informed by an array of fixed informational constraints. I take information to be any constraint on the behavior of a system, leaving a degree of uncertainty (H) to quantitatively characterize the remaining capacity of the system for generating behavior, functional as well as pathological (characteristic as well as unusual). So, H and I seem to be aspects of some single “substance”. I suggest that this “substance” is configuration, which would be either static (as part of I) or in motion, which, in turn, would be either predictable (assimilable to I) or not (as H).

A point of confusion between H and S should be dealt with here. That is that one must keep in mind the scalar level where the H is imputed to be located. So, if we refer to the biological diversity of an ecosystem as entropic, with an H of a certain value, we are not dealing with the fact that the units used to calculate the entropy (populations of organisms) are themselves quite negentropic when viewed from a different level and/or perspective. More classically, the molecules of a gas dispersing as described by Boltzmann are themselves quite orderly, and that order is not supposed to break down as the collection moves toward equilibrium (of course, this might occur as well in some kinds of more interesting systems).
It must be noted that, as a bona fide entropy should, H necessarily tends to increase within any expanding (e.g., the Big Bang) or growing system (Brooks and Wiley, 1988), as well as (Salthe, 1990) in the environment of any active local system capable of learning about its environment, including its energy gradients. The variety of an expanding system’s behavior (H) tends to increase toward the maximum capacity for supporting variety of that kind of system. This may be compared to S, which tends to increase in any isolated system. I assume the Universe is both isolated and expanding, and therefore S and H both must tend to increase within it. H materially increases in the Universe basically because, as it expands and cools at an accelerating rate, some of the increasingly nonequilibrium energy precipitates as matter. Note that, with information (I) tending to produce S by way of mediating work (no work without an informed system to do it), it does this only by having diminished H (I = k - H), so that, when a system does internal work it is, while becoming more orderly, shedding some of its own internal informational entropy (H) for externalized physical entropy (S).

Informational entropy is the aspect of the entropy concept evoked by the observation that the behavior of more complicated (information rich) systems (say, a horse) tends to be more uncertain than that of simpler systems (say, a tractor). This is so, generally, even if a more complicated system functions with its number of states reduced by informational constraints, because one needs to add in unusual and even pathological states to get all of system H, and these should have increased as the number of functional states was restricted by imposed information. With its habitual behavioral capacity reduced, a system could appear more orderly to an observer, who could obtain more information about it.
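
The relation I = k - H used above can be made concrete with a small calculation. The sketch below is illustrative only and rests on assumptions that are not in the original: it treats a system as having a finite set of states with known probabilities, takes k to be the maximum entropy log2(n) of n equally likely states, and measures everything in bits. The probabilities of the "constrained" system are invented for the example.

```python
from math import log2

def shannon_entropy(probs):
    """Shannon entropy H in bits; zero-probability states contribute nothing."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return -sum(p * log2(p) for p in probs if p > 0)

def capacity(n_states):
    """Assumed constant k: the maximum entropy log2(n), reached when all n states are equally likely."""
    return log2(n_states)

def information(probs):
    """Information as the shortfall of H below capacity, I = k - H."""
    return capacity(len(probs)) - shannon_entropy(probs)

if __name__ == "__main__":
    unconstrained = [0.25, 0.25, 0.25, 0.25]  # no constraints: H equals k, so I is zero
    constrained = [0.7, 0.1, 0.1, 0.1]        # hypothetical constraints favouring one state
    for name, p in (("unconstrained", unconstrained), ("constrained", constrained)):
        print(name, round(shannon_entropy(p), 3), round(information(p), 3))
```

Under these assumptions, any constraint that makes some states more likely than others lowers H and raises I by the same amount, which is the trade-off the text describes.
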
As a coda on informational entropy, we should consider whether Kolmogorov complexity (Kolmogorov, 1975) is an entropy. Here, a string of tokens is complex if it cannot be generated by a simpler string. As it happens, sequences that cannot be reduced to smaller ones are random mathematically. More generally, something that cannot be represented by less than a complete duplicate of itself is random / complex. But is it “entropic”? Unless it tended irreversibly to replace more ordered configurations, I would say ‘no’. Not everything reckoned random can be an entropy, even though entropic configurations tend to be randomized. The entropy concept is best restricted to randomization that must increase under at least some conditions.

Preliminary Analysis

Now, is the unpredictability of complicated systems related to the fact that one needs to keep running just to stay intact, and are these related to the fact that things have a tendency to disintegrate, and are these in turn related to the observations (a) that all efforts meet resistance, and (b) that haste makes waste? Put otherwise (and going the other way), is negefficiency related to disorder and is that related to variety? The question put this way was answered briefly in the affirmative above (in 4). So, we have imperfect links between consumers and energy gradients mapped to disorder. Of course, the disorder in question is then “subjective”, in that it can characterize energy gradients only with respect to particular consumers. Anteaters can process ants nicely, but rabbits cannot; steam engines can work with the energy freed by a focused fire, but elephants cannot. More generally, however, no fit between gradient and consumer can be perfect. Unless work is being done so slowly as to be reversible, it is taxed by entropy production.
As well, when the work in question gets hastier and hastier—and most animals are fast food processors!—consumption becomes increasingly deranged, meeting greater and greater resistance. And, of course, abiotically, the dissipative structures that get most of a gradient are those that degrade it fastest. Because these have minimal form to preserve, they are closer to being able to maximize their rate of entropy production than are living things, which, hasty withal, still need to preserve their complicated forms. Haste is imposed upon living systems by the acute need to heal and recover from perturbations as quickly as possible. They are also in competition for gradient with others of their own and other kinds, and, as Darwinians tell us, they are as well in reproductive competition with their conspecifics. Abiotic dissipative structures can almost maximize their entropy production, biotics can do so only to an extent allowed by their more complicated embodiments (of course, all systems can maximize only to an extent governed by bearing constraints). In any case, I conclude that disorder causes physical entropy production as well as being, as S, an indicator of the amount produced. The disorder of negefficiency is configurational (even if the configurations are behavioral and fleeting). And so is Boltzmann’s S. Negefficiency is generated by friction when interacting configurations are not perfectly fitted, while S is generated by random walks. An orderly walk would have some pattern to it, and, importantly, could then be harnessed to do work. Random walks accomplish nothing, reversing direction as often as not. The link between physical entropy and S is that the former is generated to the degree that the link between energy gradient and consumer is contaminated by unanticipated irregularities—by disorder—causing resistance to the work being done. Conventionally, S is held to just indicate the degree of randomness of scattered particles—a result of negefficiency. 
My point is that randomness can be viewed also as the source of the friction which scatters the particles, as caused by disorderliness in the configurations of gradient-consumer links. I conclude that physical entropy is easily mapped to disorder, both as Boltzmann saw long ago, and in a generative sense as well.

An important part of Boltzmann’s formulation is that S will increase if it changes (that is, that disorder will increase) unless energies are spent preventing that. While not conventionally thought of in this way, this is highly likely at all scales. No matter what order is constructed or discerned by what subjectivity, it will become disordered eventually, the rate being tailored to the scale of change (this fact is a major corroboration that the universe we are in must be out of equilibrium). A sugar cube will become diffused throughout a glass of water in a period of weeks, while, absent warfare, a building will gradually collapse over a period of decades, and a forest will gradually fail to regenerate over a period of eons. And so we can indeed match the notion that things tend to fall apart unless preserved with the negefficient notion that all efforts at preservation meet resistance, which is in turn linked to haste makes waste. And the necessary falling apart is, of course, easily related to the notion embodied in Prigogine’s delta S, that one must keep running just to stay put. And, of course, the harder one runs, the less efficient is the running! Furthermore, the more complicated a running system is, the more likely some of its joints will fluctuate toward being more frictional at any given moment. Furthermore, because of growth, all systems tend to become more complicated.

The inability to work very efficiently at significant loadings makes our connection to H, informational entropy.
I suggest, as above, that inefficiency is fundamentally a problem of lack of mutual information between consumer and energy gradient, a problem that increases with hasty work as well as with more working parts. It should be noted again that the acquisition of information by carving it out of possibilities is itself taxed by the same necessary inefficiency. The prior possibilities are the embodiment of H, which can be temporarily reduced by the acquisition of information. As possibilities are reduced, system informational entropy is exchanged for discarded physical entropy by way of the work involved. Learning (of whatever kind in whatever system) is subject to the same negefficiency that restrains all work.

So, we need a word that signifies a general lack of efficiency, increasing disorder, and the need to be continually active, with fluctuating uncertainty. Some word that means at the same time increasing difficulty, messiness, uncertainty (confusion) and, yes, weariness! That word is ‘entropy’. And I would guess the concept it stands for has still not been fully excavated.

I thank John Collier and Bob Ulanowicz for helpful comments on the text.

References

Aoki, I. 2001. Entropy and the exergy principles in living systems. In S.E. Jørgensen, editor. Thermodynamics and Ecological Modelling. Lewis Publishers, Boca Raton, Florida, USA.

Boltzmann, L. 1886 / 1974. The second law of thermodynamics. Reprinted in B. McGuinness, editor. Ludwig Boltzmann, Theoretical Physics and Philosophical Problems. D. Reidel, Dordrecht.

Brillouin, L. 1962. Science and Information Theory. Academic Press, New York.

Brooks, D.R. and E.O. Wiley. 1986. Evolution As Entropy: Toward a Unified Theory of Biology. University of Chicago Press, Chicago.

Carnot, S. 1824 / 1960. Reflections on the motive power of fire, and on machines fitted to develop that power. Reprinted in E. Mendoza, editor. Reflections on the Motive Power of Fire and Other Papers. Dover Publications, New York.

Foucault, M. 1972. The Archaeology of Knowledge. Tavistock Publications, London.

Kolmogorov, A.N. 1975. Three approaches to the quantitative definition of information. Problems of Information Transmission 1: 1-7.

Prigogine, I. 1955. Introduction to Thermodynamics of Irreversible Processes. Interscience Publishers, New York.

Salthe, S.N. 1975. Problems of macroevolution (molecular evolution, phenotype definition, and canalization) as seen from a hierarchical viewpoint. American Zoologist 15: 295-314.

Salthe, S.N. 1990. Sketch of a logical demonstration that the global information capacity of a macroscopic system must behave entropically when viewed internally. Journal of Ideas 1: 51-56.

Salthe, S.N. 1993. Development and Evolution: Complexity and Change in Biology. MIT Press, Cambridge, MA.

Shannon, C.E., and W. Weaver. 1949. The Mathematical Theory of Communication. University of Illinois Press, Urbana, Illinois.

Ulanowicz, R.E. 1997. Ecology, The Ascendent Perspective. Columbia University Press, New York.
